Challenging the Bing-It-On Challenge

Did you find this blog post through Bing? Probably not: 67% of worldwide searches go through Google and only 18% through Bing. But Microsoft has run a substantial TV campaign claiming that people prefer Bing “nearly 2:1.” A year ago, when I first saw these ads, the 2:1 claim seemed implausible. I would have expected the two competitors’ search results to be largely identical, so that people would have a hard time even distinguishing between the two sets of results, much less preferring one set 2:1.

When I looked into the claim a bit more, I was slightly annoyed to learn that the “nearly 2:1” claim is based on a study of just 1,000 participants. To be sure, I’ve often published studies with similarly small data sets, but it’s a little cheeky for Microsoft to base what might be a multimillion-dollar advertising campaign on what I’m guessing is a low-six-figure study.

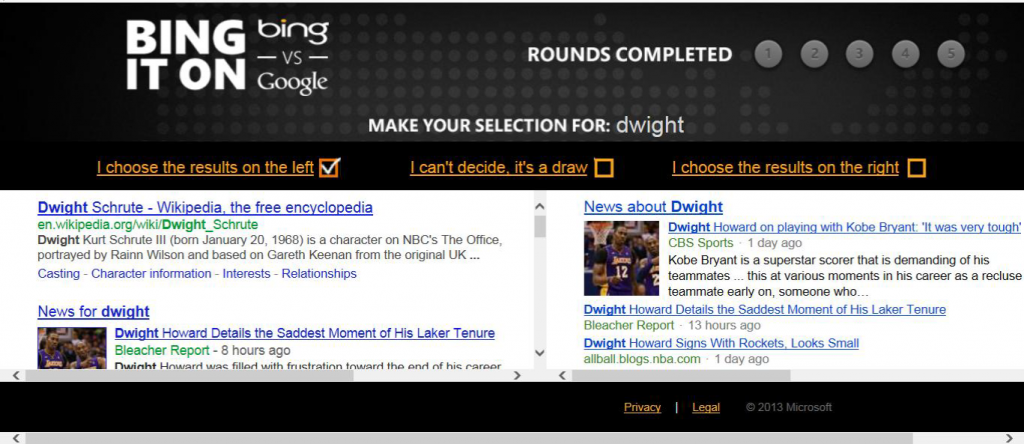

To make matters worse, Microsoft has refused to release the results from its comparison website, BingItOn.com. More than 5 million people have taken the Bing-It-On challenge, which is the cyber analog to a blind taste test. You enter a search term, and the site returns two panels of unlabeled Bing and Google results (each randomly placed on the left or right side of the screen). You tell the site which side’s results you prefer, and after five searches the site reveals whether you prefer Bing or Google (see the image above).
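
The challenge described above is a simple blind-comparison protocol. The sketch below is a hypothetical Python simulation of it, not Microsoft’s code: `fetch` stands in for whatever retrieves an engine’s results, `choose` stands in for the participant, and the rule that equal tallies count as a “tie” is an assumption.

```python
import random
from collections import Counter
from typing import Callable

ENGINES = ("Bing", "Google")

def run_challenge(
    queries: list[str],
    fetch: Callable[[str, str], str],        # hypothetical: (engine, query) -> result panel
    choose: Callable[[str, str, str], str],  # (query, left, right) -> "left" | "right" | "draw"
    rng: random.Random,
) -> str:
    """Blind side-by-side comparison over a fixed list of searches.

    The participant (`choose`) never sees engine names, only the two result
    panels, and their left/right placement is re-randomized on every search.
    """
    tally = Counter()
    for query in queries:
        left, right = rng.sample(ENGINES, 2)  # random left/right placement
        pick = choose(query, fetch(left, query), fetch(right, query))
        if pick == "left":
            tally[left] += 1
        elif pick == "right":
            tally[right] += 1
        # a "draw" counts for neither engine
    # Assumed reveal rule: equal tallies are reported as a tie.
    if tally["Bing"] == tally["Google"]:
        return "tie"
    return "Bing" if tally["Bing"] > tally["Google"] else "Google"
```

For example, a simulated participant who always picks whichever panel holds Google’s results gets a “Google” verdict after five searches no matter how the panels are shuffled, which is exactly what randomized placement is meant to guarantee.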

Microsoft’s ads encourage users to join the millions of people who have taken the challenge, but the company will not reveal whether the results from those millions are consistent with the results from its 1,000-person study.

So, together with four Yale Law School students, I set up a similar-sized experiment using Microsoft’s own BingItOn.com site to see which search engine users prefer. Contrary to Microsoft’s claim, we found that 53 percent of subjects preferred Google and 41 percent preferred Bing (the remaining 6 percent were “ties”). This is not even close to the advertised claim that people prefer Bing “nearly two-to-one.” It is misleading for advertisements to say that people prefer Bing 2:1 while also inviting viewers to join the millions who have taken the Bing-It-On challenge if, as in our study, those millions have not preferred Bing at anything near a 2:1 rate. Microsoft might have realized this: it has since altered its advertising, backing off the original claim to say simply that people “prefer” Bing.
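
The size of the gap is easy to quantify. The sketch below is a back-of-the-envelope check in Python, not the analysis from the paper: it compares Bing’s share of decisive comparisons (ties excluded) with the two-thirds share that “2:1” implies. The sample size of 1,000 is an assumption based on “similar-sized,” so the confidence interval is only illustrative.

```python
import math

def bing_share_of_decisive(bing_pct: float, google_pct: float) -> float:
    """Bing's share among participants who picked a side (ties excluded)."""
    return bing_pct / (bing_pct + google_pct)

# Reported results of the Yale study: 41% Bing, 53% Google, 6% ties.
observed = bing_share_of_decisive(41, 53)
# "2:1 for Bing" means Bing wins two of every three decisive comparisons.
claimed = bing_share_of_decisive(2, 1)

# ASSUMPTION: about 1,000 participants ("similar-sized" to Microsoft's study);
# the exact count is not stated here, so this interval is only illustrative.
ASSUMED_N = 1000
decisive_n = round(ASSUMED_N * 0.94)  # drop the 6% ties
se = math.sqrt(observed * (1 - observed) / decisive_n)
low, high = observed - 1.96 * se, observed + 1.96 * se  # normal-approximation 95% CI

print(f"Observed Bing share (ties excluded): {observed:.1%}")  # 43.6%
print(f"Share implied by 2:1:                {claimed:.1%}")  # 66.7%
print(f"Illustrative 95% CI: {low:.1%} to {high:.1%}")
```

Under the assumed sample size, even the upper end of the interval stays far below the 66.7% that a 2:1 preference for Bing would require, so the shortfall is not plausibly sampling noise.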

We also introduced a bit of randomness into our study to test whether the type of search term affects the likelihood that Bing is preferred. We randomly assigned participants to search for one of three kinds of keywords: Bing’s suggested search terms, popular search terms, or search terms of their own choosing. When participants used Bing’s suggested search terms, the two engines were statistically tied (47% preferring Bing vs. 48% preferring Google). But when participants chose their own searches or used the web’s most popular searches, a sizable gap appeared: 55-57% preferred Google, while only 35-39% preferred Bing. These secondary tests indicate that Microsoft selected suggested search terms that it knew were more likely to produce Bing-preferring results. You can read our full paper here.
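
The randomized design in this paragraph can be sketched in a few lines. This is a hypothetical Python illustration, not the code used in the study: the condition labels, the balanced round-robin assignment, and the `outcomes` mapping (each participant’s overall Bing, Google, or tie verdict) are all assumptions. Comparing the per-condition shares is what shows whether the choice of search terms shifts the result.

```python
import random
from collections import Counter, defaultdict

# Hypothetical labels for the three search-term conditions.
CONDITIONS = ("bing_suggested", "popular", "self_suggested")

def assign(participants, rng: random.Random) -> dict:
    """Shuffle participants, then deal them round-robin into the three
    conditions so that group sizes differ by at most one."""
    order = list(participants)
    rng.shuffle(order)
    return {p: CONDITIONS[i % len(CONDITIONS)] for i, p in enumerate(order)}

def shares_by_condition(assignment: dict, outcomes: dict) -> dict:
    """Percent of each condition's participants whose overall verdict was
    'Bing', 'Google', or 'tie'. `outcomes` maps participant -> verdict."""
    counts = defaultdict(Counter)
    for p, cond in assignment.items():
        counts[cond][outcomes[p]] += 1
    return {
        cond: {verdict: 100 * n / sum(c.values()) for verdict, n in c.items()}
        for cond, c in counts.items()
    }
```

Balanced assignment keeps the three groups the same size; drawing each participant’s condition independently with `rng.choice(CONDITIONS)` would also be random but could leave the groups uneven.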

The upshot: several of Microsoft’s claims are a little fishy. Or, to put the conclusion more formally, we think that Google has a colorable deceptive-advertising claim against Microsoft. Such a claim could be worth a lot of money in lost ad revenue if the ads misled people into thinking that a substantial majority of people prefer Bing over Google. Then again, I might be a little overzealous in seeing Lanham Act violations: back in 2010, I suggested that the movie *Date Night* might contain a deceptive Kindle ad.
